1 - The newly sighted fail to match seen with felt

Abstract

Would a blind subject, on regaining sight, be able to immediately visually recognize an object previously known only by touch? We addressed this question, first formulated by Molyneux three centuries ago, by working with treatable, congenitally blind individuals. We tested their ability to visually match an object to a haptically sensed sample after sight restoration. We found a lack of immediate transfer, but such cross-modal mappings developed rapidly.

Main

For Locke, Berkeley, Hume and other empiricists, a positive answer to the Molyneux question would confirm the existence of an innate idea, that there exists a priori an 'amodal' conception of space common to both senses. A negative answer would support the idea that its acquisition results from an experience-driven process of association between the senses. The answer to this question would have an important bearing on contemporary issues in neuroscience concerned with cross-modal identification and intermodal interactions.

A few studies of cross-modal matching by neonates have reported that they are able to visually choose between two objects that they have previously felt only via touch, suggesting an innately available cross-modal mapping. These results, however, have proven hard to replicate. A number of attempts have been made to address the interaction between vision and tactual information, but the reports were loosely characterized and used objects of arbitrary complexity, without consideration of their visual discriminability. Given these caveats and methodological drawbacks, a definitive answer to the Molyneux question has remained elusive.

The critical conditions for testing the Molyneux question are as follows. Appropriate individuals must be recruited as participants: they should be congenitally blind, but treatable, and mature enough for reliable discrimination testing. A more subtle precondition is that both senses in question, touch and vision, must be independently functional after treatment. Molyneux probably presupposed that a newly sighted individual would have fully functional vision and touch, but an optically restored eye does not necessarily imply the functional ability to make full use of the visual signal. Indeed, this was an important concern surrounding early experimental attempts to address the Molyneux question. Thus, our tests used visuo-haptic stimuli that are appropriate to both the visual and haptic capabilities of the subject.

Patients who meet these criteria are extremely rare in western countries because the vast majority of cases of curable congenital blindness are detected in infancy and treated as early as possible. However, many congenitally blind children in developing countries do not receive treatment, despite having curable conditions, because of inadequate medical services. A humanitarian and scientific effort to locate and treat these children has been undertaken under the auspices of Project Prakash ( http://www.ProjectPrakash.org/ ) and a small fraction of these individuals satisfied the requirements of our study.

Five subjects were recruited from Project Prakash for this study. Subjects YS (male, 8 years), BG (male, 17 years), SK (male, 12 years) and PS (male, 14 years) presented with dense congenital bilateral cataracts. Subject PK (female, 16 years) presented with bilateral congenital corneal opacities. Subjects received a comprehensive ophthalmological examination before and after treatment. Prior to treatment, subjects were only able to discriminate between light and dark, with subjects BG and PK also being able to determine the direction of a bright light. None of the subjects were able to perform form discrimination. YS, BG, SK and PS underwent cataract removal surgery and an intraocular lens implant. PK was provided with a corneal transplant. Post-treatment, subjects YS, BG, SK, PS and PK achieved resolution acuities for near viewing of 0.24°, 0.36°, 0.24°, 0.54° and 0.24°, respectively. Informed consent was obtained from all subjects (Supplementary Methods).
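To put the post-treatment acuities on a more familiar scale, the short sketch below converts them to arcminutes and to approximate Snellen fractions. It assumes that each reported "resolution acuity" is a minimum angle of resolution, and it uses the standard convention that 20/20 corresponds to 1 arcmin. The function names are illustrative and are not taken from the study.

```python
# Assumption: each reported acuity (in degrees) is a minimum angle of resolution (MAR).
# Convention: Snellen 20/20 corresponds to a MAR of 1 arcmin.

def mar_arcmin(resolution_deg):
    """Convert a resolution acuity from degrees to arcminutes."""
    return resolution_deg * 60.0

def snellen_denominator(resolution_deg):
    """Approximate the X in Snellen '20/X' for a given resolution acuity."""
    return round(20 * mar_arcmin(resolution_deg))

# Post-treatment near-viewing acuities reported in the text (degrees).
acuities_deg = {"YS": 0.24, "BG": 0.36, "SK": 0.24, "PS": 0.54, "PK": 0.24}

for subject, deg in acuities_deg.items():
    print(f"{subject}: {mar_arcmin(deg):.1f} arcmin, roughly 20/{snellen_denominator(deg)}")
```

Under these assumptions, the finest acuity in the group (0.24°) corresponds to about 20/288 and the coarsest (0.54°) to about 20/648.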

Our stimulus set comprised 20 pairs of simple three-dimensional forms drawn from a children's shape set (Fig. 1a). Each pair of stimuli was used only once for each condition. The choice of match and sample was randomized for each subject. The forms were large (ranging from 6 to 20 degrees of visual angle at a viewing distance of 30 cm) so as to sidestep any acuity limitations of the subjects. They were presented on a plain white background to avoid any difficulties in figure-ground segmentation.
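The stimulus sizes can be checked with a little geometry. The sketch below converts the stated visual angles into physical extents at the 30 cm viewing distance and compares the smallest form with the coarsest post-treatment acuity reported above (0.54°). It is a back-of-envelope illustration with hypothetical names, not the authors' procedure.

```python
import math

def physical_size_cm(angle_deg, distance_cm):
    """Extent of an object that subtends angle_deg at distance_cm (centered on the line of sight)."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

VIEWING_DISTANCE_CM = 30.0   # from the text
SMALLEST_FORM_DEG = 6.0      # from the text
LARGEST_FORM_DEG = 20.0      # from the text
COARSEST_ACUITY_DEG = 0.54   # coarsest post-treatment acuity reported in the text

smallest_cm = physical_size_cm(SMALLEST_FORM_DEG, VIEWING_DISTANCE_CM)
largest_cm = physical_size_cm(LARGEST_FORM_DEG, VIEWING_DISTANCE_CM)
size_to_acuity_ratio = SMALLEST_FORM_DEG / COARSEST_ACUITY_DEG

print(f"Smallest form: about {smallest_cm:.1f} cm across")
print(f"Largest form: about {largest_cm:.1f} cm across")
print(f"Smallest form is about {size_to_acuity_ratio:.0f} times the coarsest acuity")
```

The forms therefore span roughly 3 to 11 cm, and even the smallest is about 11 times larger than the coarsest resolution limit in the group.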

Subjects were tested as soon as was practical after surgery of the first eye (in all cases, within 48 h of treatment) and performed a match-to-sample task. One sample object was presented either visually or haptically, followed by the simultaneous presentation of the original object (target) and a distractor in the modality specified by the condition (Fig. 1b). The subjects' task was to identify the target.
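The structure of a match-to-sample session can be sketched as follows. This is a minimal illustration that assumes one trial per shape pair and a random choice of which member of the pair serves as the sample. The object labels, the `build_trials` helper and the seed are hypothetical and do not describe the authors' actual software or randomization scheme.

```python
import random

# Sample modality and test modality for each of the three conditions in the text.
CONDITIONS = {
    "T-T": ("touch", "touch"),
    "V-V": ("vision", "vision"),
    "T-V": ("touch", "vision"),
}

def build_trials(condition, n_pairs=20, seed=None):
    """Build one trial per shape pair; the sample (target) is chosen at random within each pair."""
    sample_modality, test_modality = CONDITIONS[condition]
    rng = random.Random(seed)
    trials = []
    for pair_index in range(n_pairs):
        members = [f"pair{pair_index:02d}_A", f"pair{pair_index:02d}_B"]  # hypothetical labels
        rng.shuffle(members)
        target, distractor = members
        trials.append({
            "pair": pair_index,
            "sample": target,              # shown first, in sample_modality
            "target": target,              # shown again at test, in test_modality
            "distractor": distractor,      # shown alongside the target at test
            "sample_modality": sample_modality,
            "test_modality": test_modality,
        })
    return trials

transfer_trials = build_trials("T-V", seed=1)
print(transfer_trials[0])
```

Each pair appears once per condition, which matches the description above; everything else in the sketch is an assumption made for illustration.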

By 2 d after treatment, all subjects performed near ceiling for the touch-to-touch condition (mean, 98%) and the vision-to-vision condition (mean, 92%), indicating that the stimuli were easily discriminable in both modalities (Fig. 2a). In contrast, performance fell precipitously in the touch-to-vision condition, to near chance level (mean, 58%), and was significantly different from touch-to-touch and vision-to-vision performance (P < 0.001 and P < 0.004, respectively).

We had the opportunity to test three of the five subjects on later dates, using novel, but similar, stimuli to avoid object-specific experience as a confounding factor. Notably, performance in the touch-to-vision condition with novel test objects improved significantly (P < 0.02) in as little as 5 d from the initial performance test post-treatment, given only natural real-world visual experience (Fig. 2b). Subjects were given no training during the intervening period.

Our results suggest that the answer to Molyneux's question is likely negative. The newly sighted subjects did not exhibit an immediate transfer of their tactile shape knowledge to the visual domain. This finding has important implications for bimodal perception. Whatever linkage between vision and touch may pre-exist concomitant exposure of both senses, it is insufficient for reconciling the identity of the separate sensory representations. However, this ability can apparently be acquired after short real-world experiences. An alternative explanation for the progression in haptic-visual cross-modal abilities is a rapid increase in the visual ability to create a three-dimensional representation, thus allowing for a more accurate mapping between haptic structures and visual ones. This seems to run counter, however, to the observed slow progression of visual parsing capabilities in other studies, which argue that this kind of learning requires many months, rather than days. We instead favor an account that relies on strategies using two-dimensional features, such as corners, edges and curved segments, that would be apparent across both domains. However, some important questions remain open. For instance, would the newly sighted have shown an immediate transfer from touch to vision if they possessed three-dimensional visual representations right from sight onset? Also, can cross-modal mappings emerge after sight onset with experience of independent, but not correlated, data across the two modalities?

The rapidity of acquisition suggests that the neuronal substrates responsible for cross-modal interaction might already be in place before they become behaviorally manifest. This appears to be consistent with recent neurophysiological findings documenting neurons that are capable of responding to two or more modalities even in cortical regions devoted mainly to only one modality. Also notable are demonstrations from human brain imaging studies that multi-modal responses in primary sensory areas of the cortex can be elicited rapidly during unimodal deprivation, consistent with our findings of a short time course of cross-modal learning. We recently proposed a candidate model of cross-modal mapping and others have shown that the statistical properties of the visual environment are conducive to this form of learning.

It is interesting to speculate on the possible ecological importance of a learned, rather than innate, mapping between vision and haptics. A dynamic mapping based on experience would indeed be preferred if the representations of the visual and haptic features are not entirely predictable in advance of experience. The representation of haptic features, for instance, may change as the body undergoes physical alterations throughout development, requiring updated correspondences between physical features and proprioceptive feedback. In vision, improvements in acuity and object segmentation strategies throughout the first year of infant development may require new representations for features that were not perceivable previously. Even in adulthood, studies with individuals with late-onset vision have suggested that the ability to form representations of new features is retained. If the representations of visual and haptic features are indeed acquired through experience, and perhaps even change throughout life, a dynamic mapping may be the most practical method of achieving cross-modal integration.

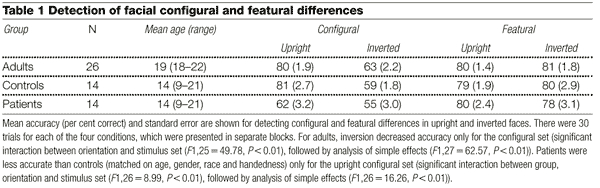

Figure 1: Stimuli and testing procedure.

(a) Four examples from the set of 20 shape pairs used in our experiments.

(b) The match-to-sample procedure.

The within-modality tactile match to tactile sample task assesses haptic capability and task understanding.

The visual match to visual sample task provides a convenient way to assess whether subjects' form vision is sufficient for visually discriminating between test objects.

The visual match to tactile sample task represents the critical test of intermodal transfer.

T = touch

V = vision

s = sample

d = distractor

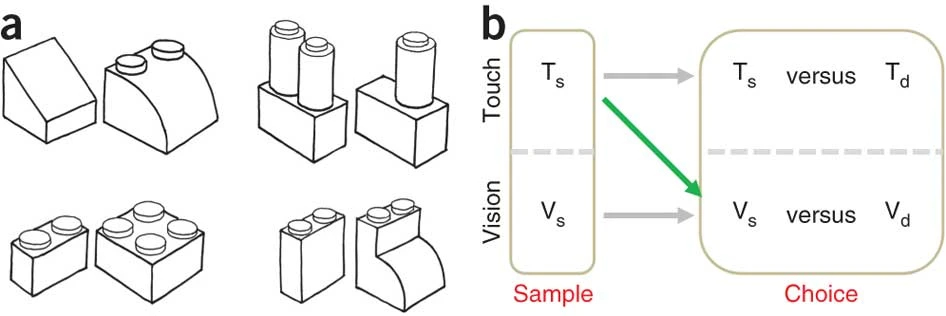

Figure 2: Intra- and inter-modal matching results.

(a) Within-modality and cross-modality match to sample performance of five newly sighted individuals 2 d after sight onset.

Newly sighted subjects exhibited excellent performance on the touch-to-touch (T-T) and vision-to-vision (V-V) tasks, but were near chance on the transfer (T-V) task.

For each of the touch-to-touch and vision-to-vision sessions, P < 0.003 (two-tailed binomial test).

For each of the transfer sessions, P > 0.25. “Average”, average performance across subjects. *P < 0.05.

(b) Visual match to tactile sample performance of three subjects across two post-operative assessments.

Subjects exhibited significant improvement in cross-modal transfer a short duration after the first assessment.

For each of the first transfer sessions, P > 0.25 (two-tailed binomial test).

For each of the follow-up sessions shown above, P < 0.015.
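The per-session binomial tests in this legend can be illustrated with an exact calculation. The sketch below assumes 20 two-alternative trials per session (inferred from the 20 shape pairs, each used once per condition) and a chance level of 0.5. The scores are illustrative values chosen to show where the reported thresholds fall; they are not the subjects' actual data.

```python
from math import comb

def two_tailed_binomial_p(correct, trials):
    """Exact two-tailed binomial P value against chance = 0.5.

    Sums the probabilities of all outcomes that are no more likely than the observed one.
    With p = 0.5 every outcome k has probability comb(trials, k) / 2**trials,
    so the comparison can be done on the binomial coefficients directly.
    """
    observed = comb(trials, correct)
    as_extreme = sum(
        comb(trials, k) for k in range(trials + 1) if comb(trials, k) <= observed
    )
    return as_extreme / 2 ** trials

N_TRIALS = 20  # assumption: one trial per shape pair in each session

for score in (13, 16, 17):  # illustrative scores only
    p_value = two_tailed_binomial_p(score, N_TRIALS)
    print(f"{score}/{N_TRIALS} correct: P = {p_value:.4f}")
```

Under this assumption, 17 of 20 correct is the lowest score that gives P < 0.003, 16 of 20 gives P < 0.015, and any score of 13 of 20 or fewer (down to chance) gives P > 0.25, which is consistent with the thresholds quoted in the legend.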

2 - Early visual experience and face processing

Abstract

Adult-like expertise in processing face information takes years to develop and is mediated in part by specialized cortical mechanisms sensitive to the spacing of facial features (configural processing). Here we show that deprivation of patterned visual input from birth until 2–6 months of age results in permanent deficits in configural face processing. Even after more than nine years' recovery, patients treated for bilateral congenital cataracts were severely impaired at differentiating faces that differed only in the spacing of their features, but were normal in distinguishing those varying only in the shape of individual features. These findings indicate that early visual input is necessary for normal development of the neural architecture that will later specialize for configural processing of faces.

Main

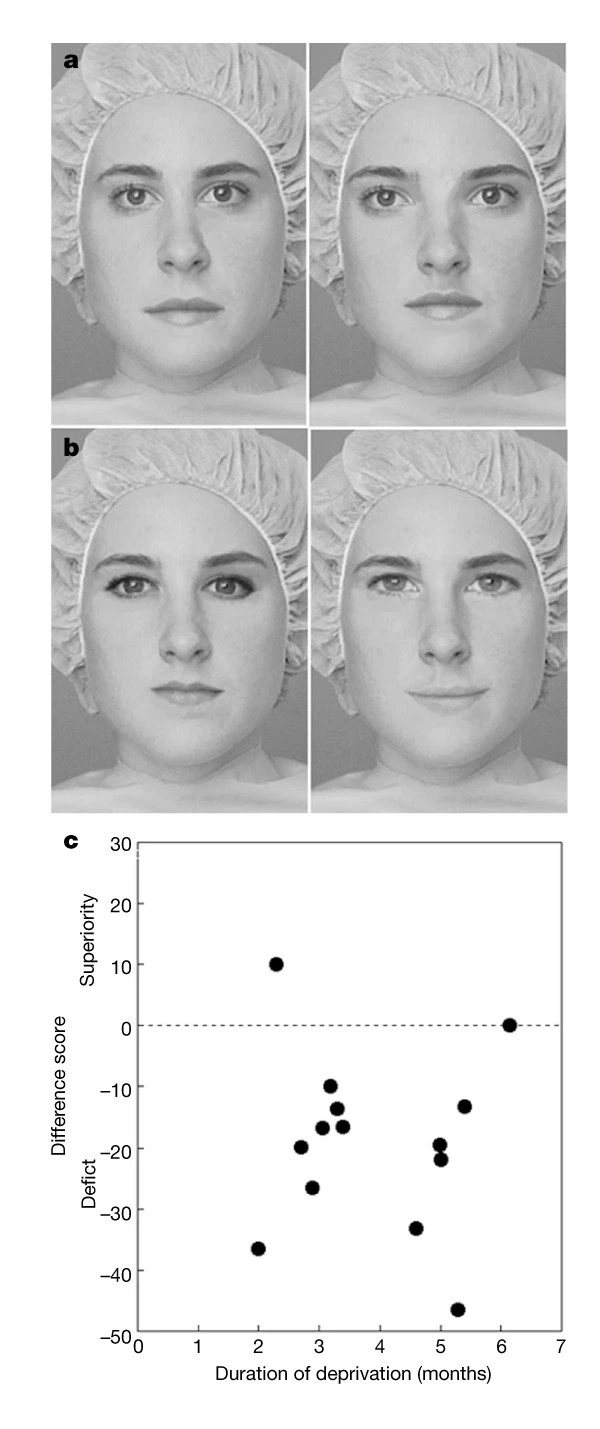

For face recognition, subtle differences in the shape of specific features (featural information) and/or in their spacing (configural information) must be encoded. We created two sets of faces that differentiated configural from featural processing (Fig. 1a, b). We asked 26 normal right-handed adults to view the faces from each set binocularly and to decide whether they were the same or different when upright and inverted. Adults were equally accurate in differentiating faces from the two sets in their canonical upright position (Table 1). Inverting the faces decreased adults' accuracy for the configural but not the featural set, consistent with previous findings.

We used these stimuli to test face processing in 14 patients (6 male; 13 right-handed; 11 Caucasian) born with a dense central cataract in each eye that prevented patterned stimulation from reaching the retina. After removal of the natural lens, an optical correction was fitted to focus visual input (mean duration of deprivation, 118 days from birth; range, 62–187 days); patients had had at least nine years of visual experience after treatment before testing and, when necessary, wore an additional optical correction to focus the eyes at the testing distance.

Compared with age-matched normal subjects, the deprived patients distinguished faces from the featural set normally, but were significantly impaired for the configural set of upright faces (Table 1). Performance was not related to the duration of the deprivation for either set (P > 0.10; Fig. 1c). There was no correlation between acuity (range from 20/25 to 20/80 in the better eye; median, 20/40) and patients' accuracy on either set (P > 0.10).

Our results indicate that visual experience during the first few months of life is necessary for the normal development of expert face processing. Because normal infants have poor visual acuity, their cortex is exposed only to information of low spatial frequency which, for faces, specifies the global contour and location of features but little of their detail. This early information sets up the neural architecture that will specialize in expert configural processing of faces over the next 10–12 years. When visual input is delayed by as little as two months, permanent deficits result.

Patients performed normally in a different task requiring the discrimination of geometric patterns based on the location of an internal feature. This suggests that deficits in configural processing may be restricted to the processing of faces, as expected from evidence that normal adults use separate systems for processing face and non-face objects.

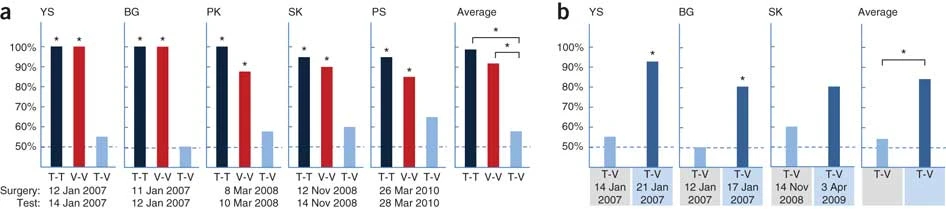

Figure 1: Measurement of configural versus featural processing.

Examples of stimuli from a, the configural set (created by moving the eyes and mouth) and b, the featural set (created by replacing the eyes and mouth).

On each trial, one of the five possible faces appeared for 200 ms, and following an interstimulus interval of 300 ms, a second face appeared until the subject used a joystick to signal a 'same' or 'different' judgement.

c, Patients' performance on the upright configural set plotted as a function of the duration of deprivation from birth.

Each circle represents the difference between the accuracy (per cent correct) of one patient and his/her age-matched control.